Final Year Project

My Final Year Project is titled 'Using A Game Engine and Procedural Generation to Visualise Audio'.

For this project, I investigated how games such as Audiosurf, and beatmap generators for rhythm games, process audio data to create a visual 'beatmap' for users to play. In most rhythm games, maps/levels are created manually, either by the game's developers or by community members using modified applications. Audiosurf, however, generates its levels automatically, and some map creators use AI or other techniques to do the same.

As far as I could find, a game engine had not previously been used for this purpose. The closest examples were audio visualisers that used one of Unity's built-in functions to display a graph of an entire audio track. This made the project difficult, as there were few points of reference; the closest ones were implementations written in Python and MATLAB.

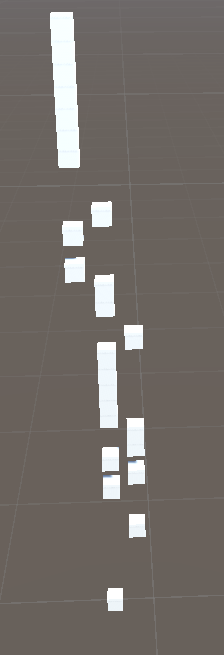

This project required a lot of background research. I learned about and implemented the Fourier Transform using complex numbers, and attempted to implement audio source separation using Independent Component Analysis (ICA). I then applied my own ideas to visualise this data, as a form of procedural generation, in a style similar to the popular mobile game Piano Tiles.
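To illustrate the general idea, here is a minimal Python sketch of a Fourier-transform-to-tiles pipeline. It is not the project's actual code, which ran inside a game engine. It assumes mono audio as a list of floats. The helper names (`dominant_frequency`, `frequency_to_lane`, `generate_tiles`), the 4-lane log-frequency mapping, the frame size and the silence threshold are all hypothetical choices. They are only meant to show how complex-valued DFT output could drive Piano Tiles-style tiles.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform: X[k] = sum_n x[n] * e^(-2*pi*i*k*n/N)."""
    n_samples = len(samples)
    return [
        sum(samples[n] * cmath.exp(-2j * math.pi * k * n / n_samples)
            for n in range(n_samples))
        for k in range(n_samples)
    ]


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest bin in the lower half of the spectrum."""
    spectrum = dft(samples)
    half = len(samples) // 2  # for real input, bins above N/2 mirror the lower half
    # Skip bin 0 (the DC offset) and pick the bin with the largest magnitude.
    k = max(range(1, half), key=lambda i: abs(spectrum[i]))
    return k * sample_rate / len(samples)


def frequency_to_lane(freq, min_freq=50.0, max_freq=2000.0, lanes=4):
    """Hypothetical mapping: split a log-frequency range evenly into tile lanes."""
    freq = min(max(freq, min_freq), max_freq)  # clamp into the supported range
    position = math.log(freq / min_freq) / math.log(max_freq / min_freq)
    return min(int(position * lanes), lanes - 1)


def generate_tiles(samples, sample_rate, frame_size=400, min_energy=1e-3):
    """Return one lane index per frame, or None where the frame is (near-)silent."""
    tiles = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energy = sum(x * x for x in frame) / frame_size  # mean power of the frame
        if energy < min_energy:
            tiles.append(None)  # silent frame: no tile
        else:
            tiles.append(frequency_to_lane(dominant_frequency(frame, sample_rate)))
    return tiles


if __name__ == "__main__":
    rate = 8000
    # 50 ms of a 440 Hz tone followed by 50 ms of silence.
    audio = [0.5 * math.sin(2 * math.pi * 440 * n / rate) for n in range(400)] + [0.0] * 400
    print(generate_tiles(audio, rate))  # prints [2, None]
```

With a 400-sample frame at 8 kHz, each DFT bin spans 20 Hz, so a 440 Hz tone falls exactly in bin 22. The naive DFT costs O(N²) per frame. For realistic frame sizes, an FFT (O(N log N)) would normally be used instead.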

To measure the project's success, I used a scoring system that rated how well my program could visualise audio files featuring different instruments. Overall, the project was a success, but there is a lot of room for improvement. In addition, running it requires a device with very high specifications, as my computer did not have enough processing power to perform audio source separation inside a game engine.

Here is an example of my project; the image on the right represents this audio:

Here is a demo video of my project using some audio files.